Qk V

Q K. 62 likes. Official page of QK Venta Online. Stylish items at the best price. Monday to Saturday, 9 a.m. to 7 p.m.

Qk v. TIME 2 Core Flexible Cable; GoodHome Colenso 00W white oscillating fan heater; GoodHome Colenso 0W white free-standing fan heater; Masterlite PP 400W random orbit sander; Cooke & Lewis dishwashers; Erbauer ERO450 random orbit sander; Whoosh ceiling fans; Hotpoint, Indesit, Creda, Swan and Proline branded tumble dryers; Telamon LED. 5/10/21 · 21/5/ Apology and notice regarding the ingredient labeling of "Kyo Noren Tofu Tsuyu 0ml". 21/5/10 Added "This Month's Shiro Dashi" (May). 21/4/12 Regarding a COVID-19 infection of one of our employees.

Ksp = 1.61 × 10⁻⁵. Calculating the Solubility of an Ionic Compound in Pure Water from its Ksp. Example: Estimate the solubility of Ag2CrO4 in pure water if the solubility product constant for silver chromate is 1.1 × 10⁻¹². Write the equation and the. Check out Evan's new book, Built to Serve, at http//evancarmichaelcom/book In today's video, you will learn about the 7 best lessons from Elon Musk, Jeff B. For which process is.
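The silver chromate example above can be worked through as a short sketch. It assumes the solubility product is 1.1 × 10⁻¹² (the commonly tabulated value, taken here as an assumption because the figure in the snippet is garbled) and that the salt dissolves ideally as Ag2CrO4(s) ⇌ 2 Ag⁺ + CrO4²⁻. The function name `solubility_ag2cro4` is hypothetical.

```python
def solubility_ag2cro4(ksp: float) -> float:
    """Molar solubility s of Ag2CrO4 in pure water.

    With [Ag+] = 2s and [CrO4^2-] = s, the solubility product is
    Ksp = (2s)^2 * s = 4 * s^3, so s = (Ksp / 4)^(1/3).
    """
    return (ksp / 4.0) ** (1.0 / 3.0)


# Assumed value of Ksp for silver chromate (see the lead-in above).
KSP_ASSUMED = 1.1e-12

s = solubility_ag2cro4(KSP_ASSUMED)
print(f"estimated solubility: {s:.2e} mol/L")
```

Under these assumptions the estimate comes out at roughly 6.5 × 10⁻⁵ mol/L. Activity corrections and side equilibria are ignored.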

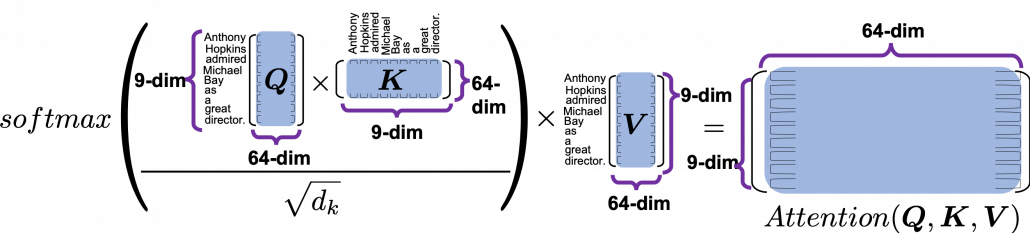

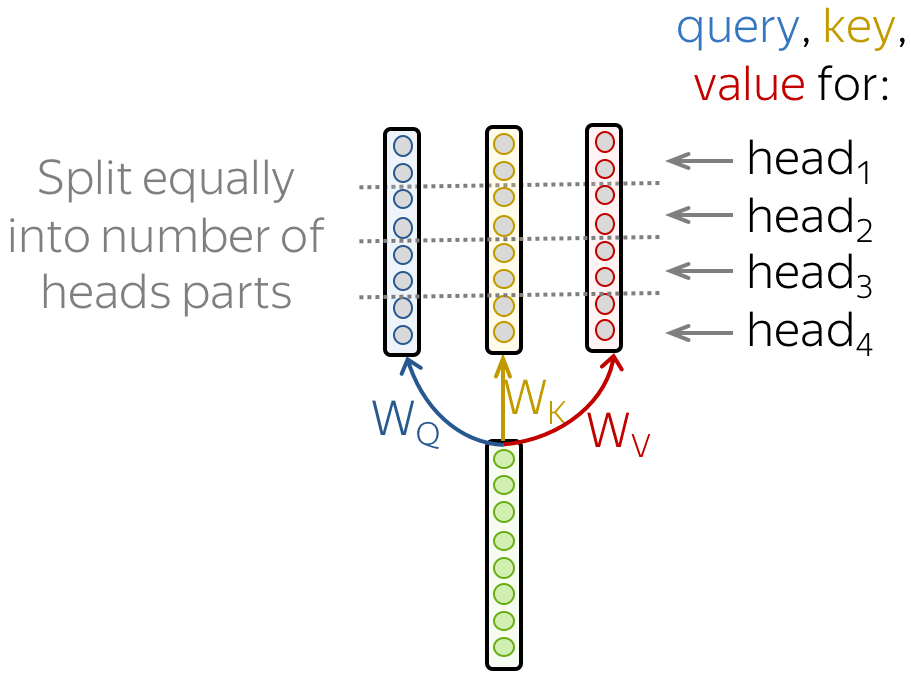

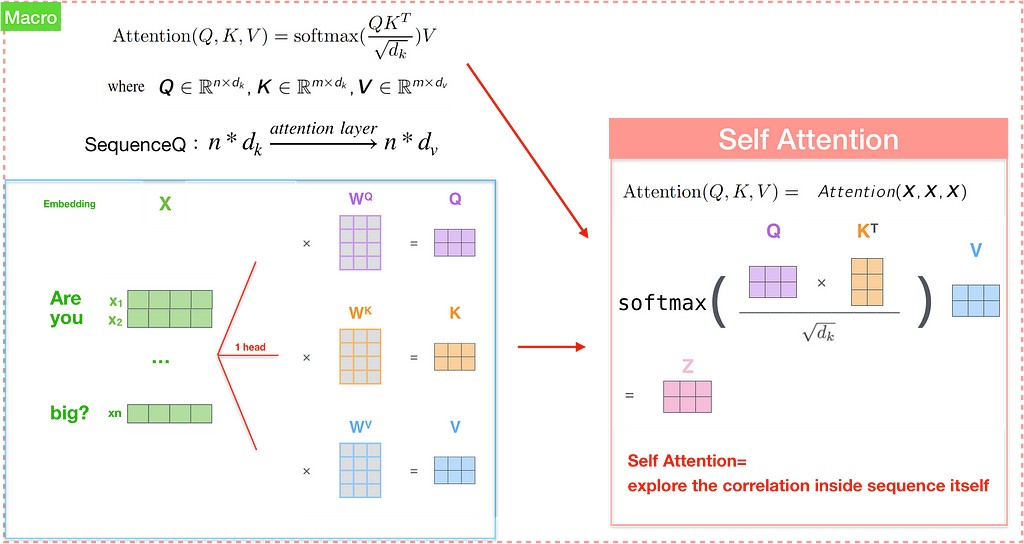

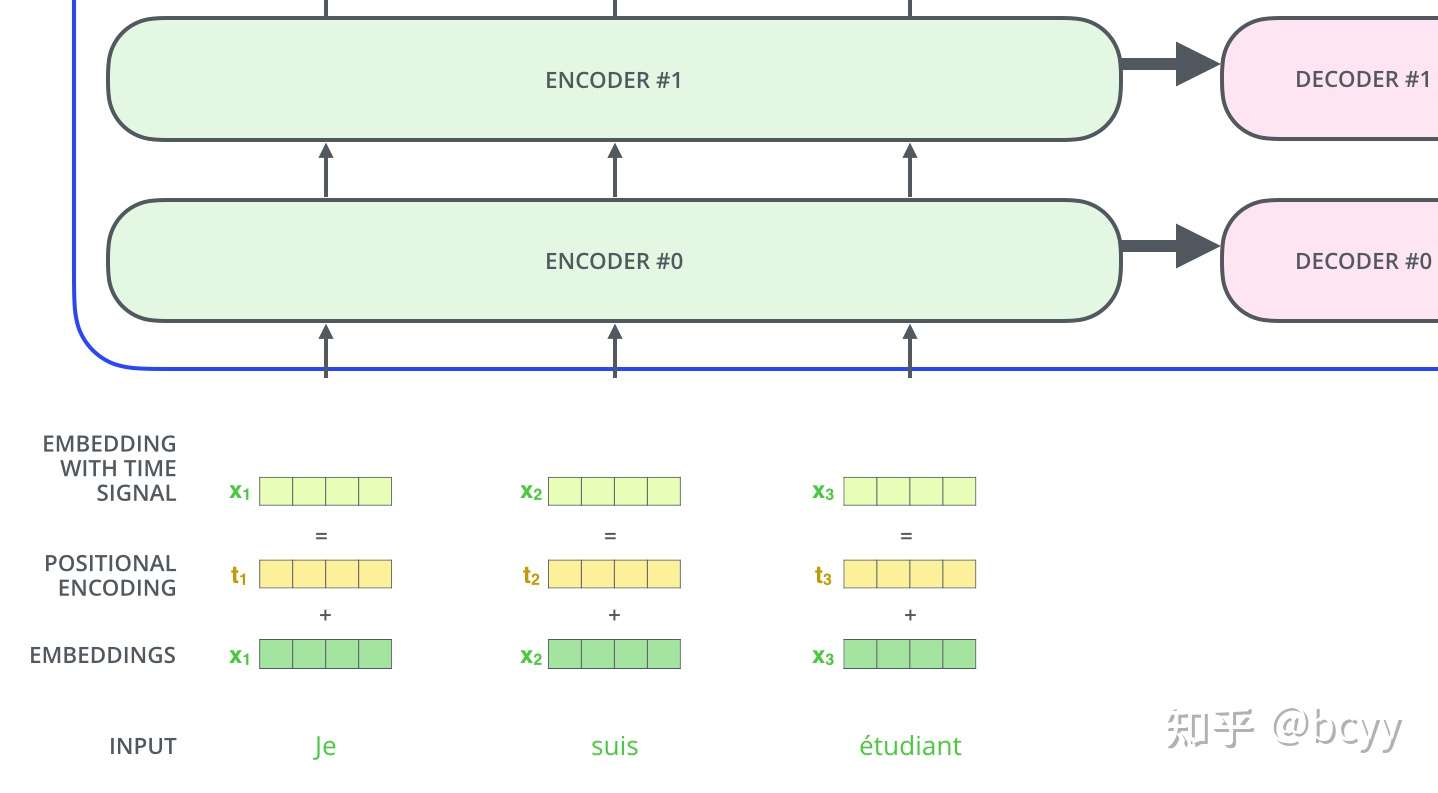

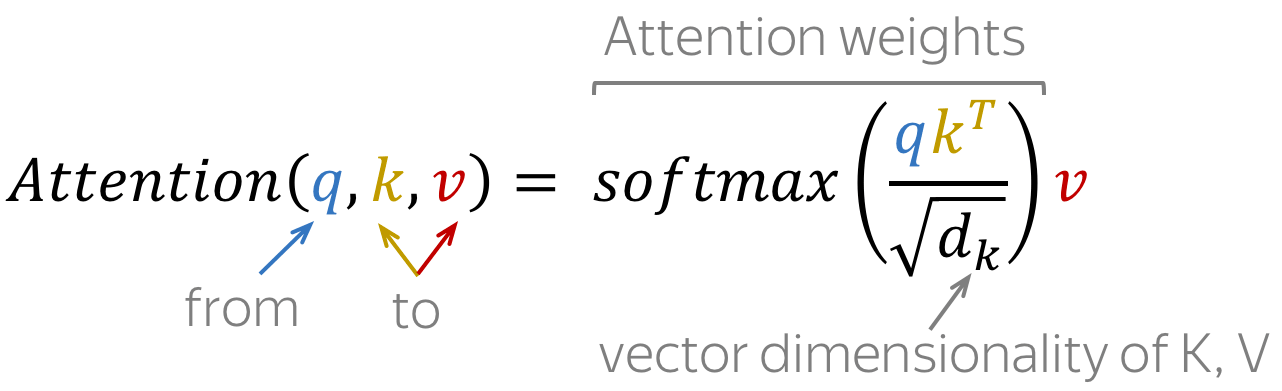

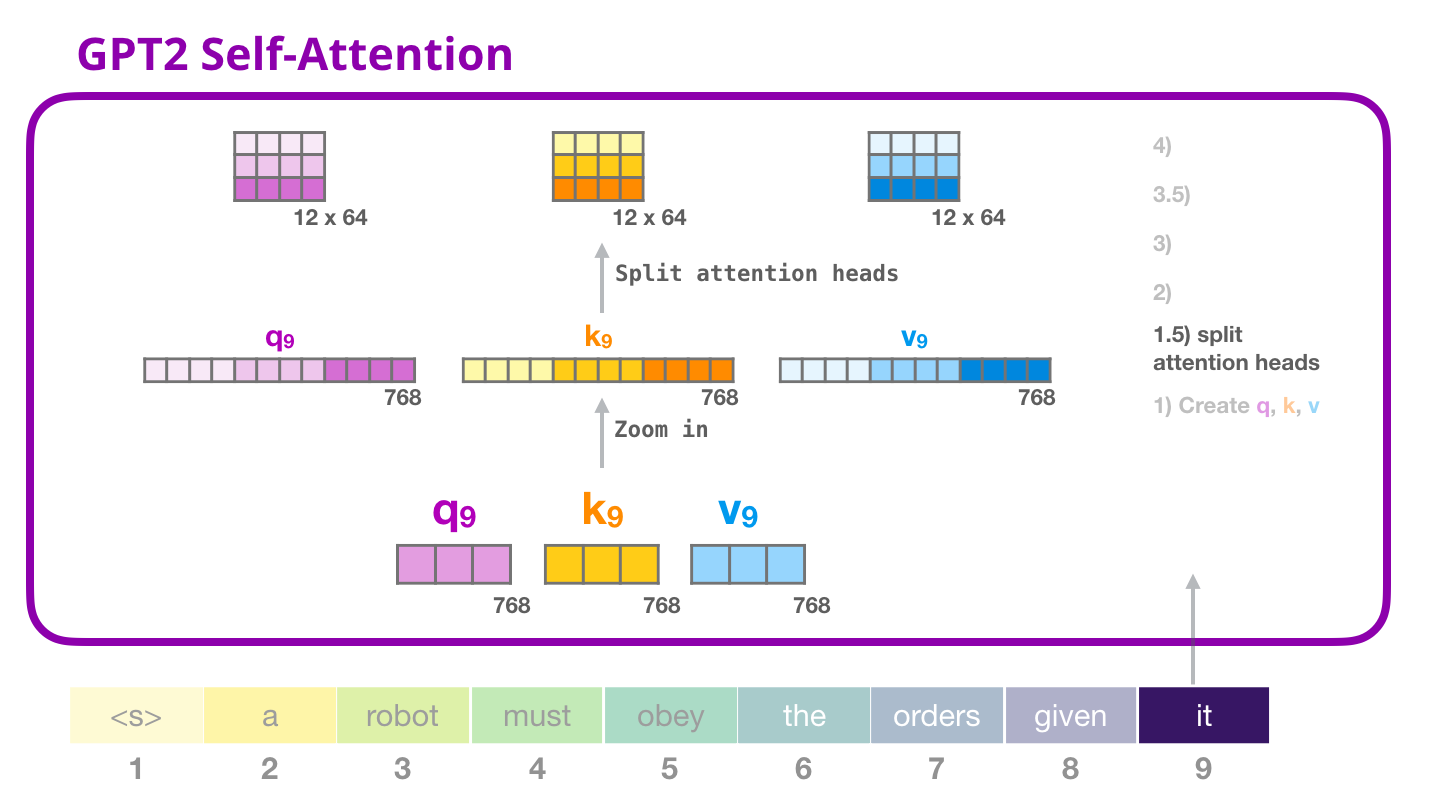

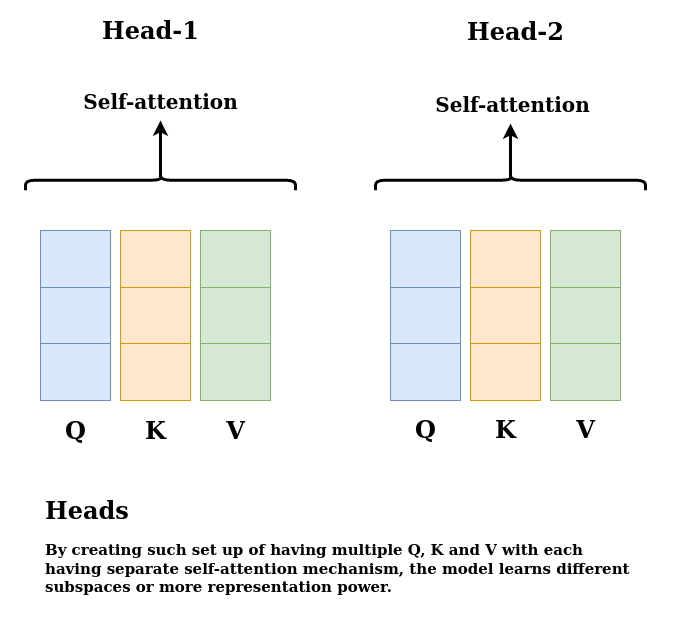

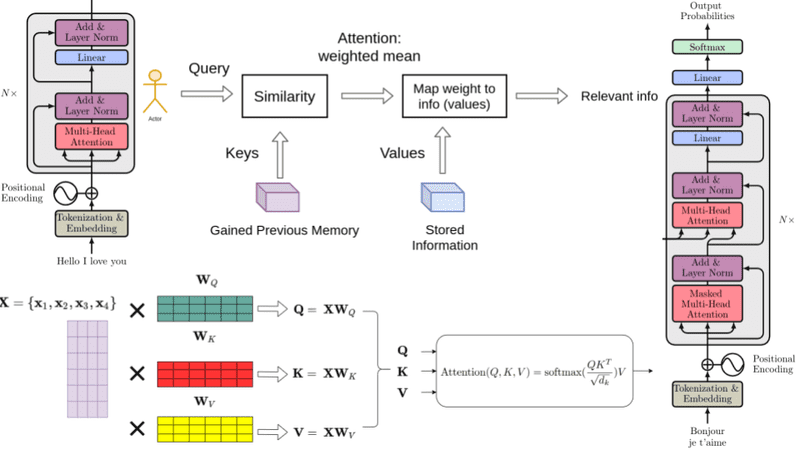

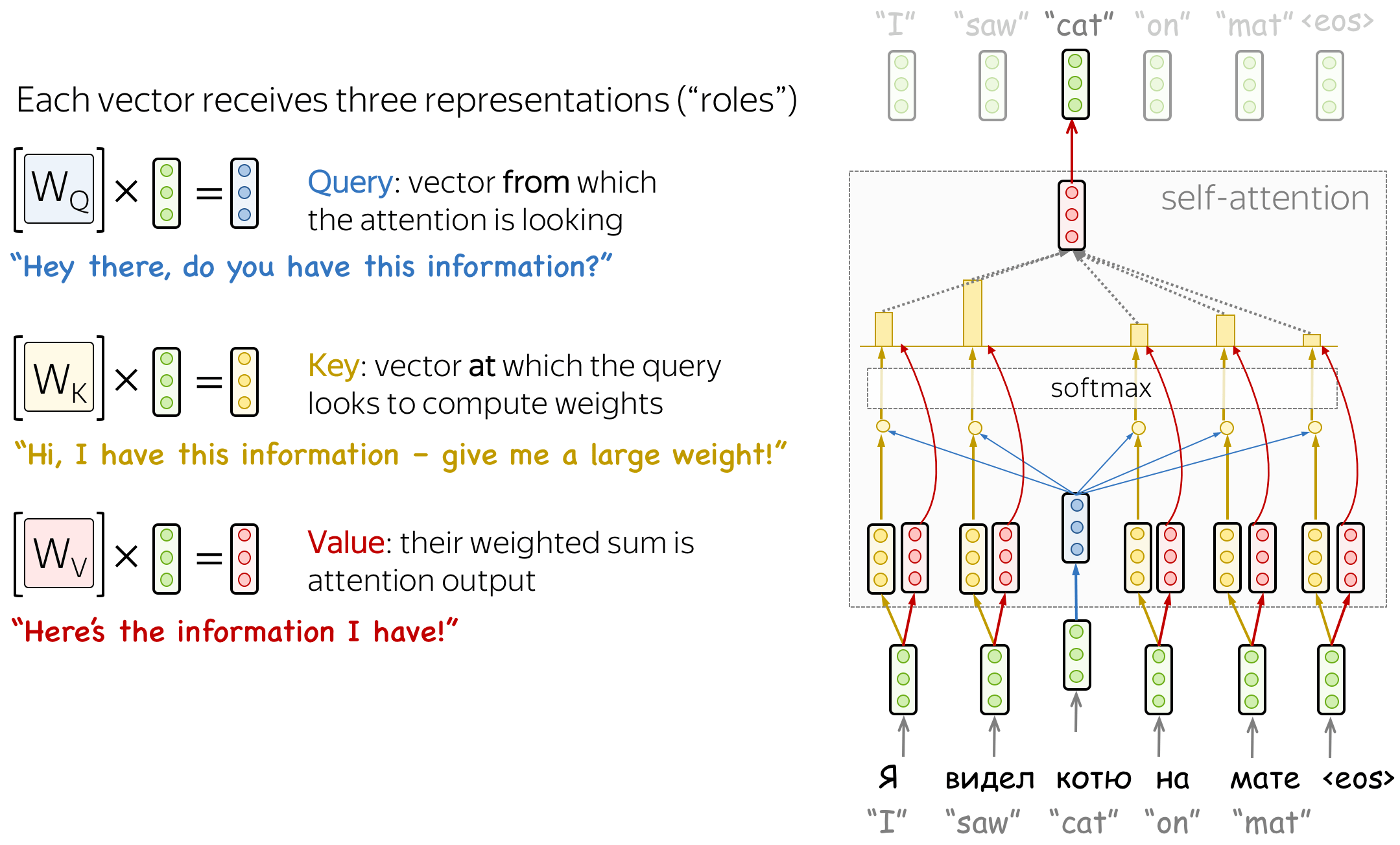

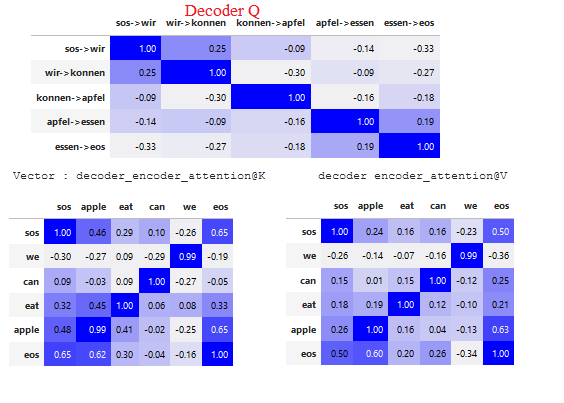

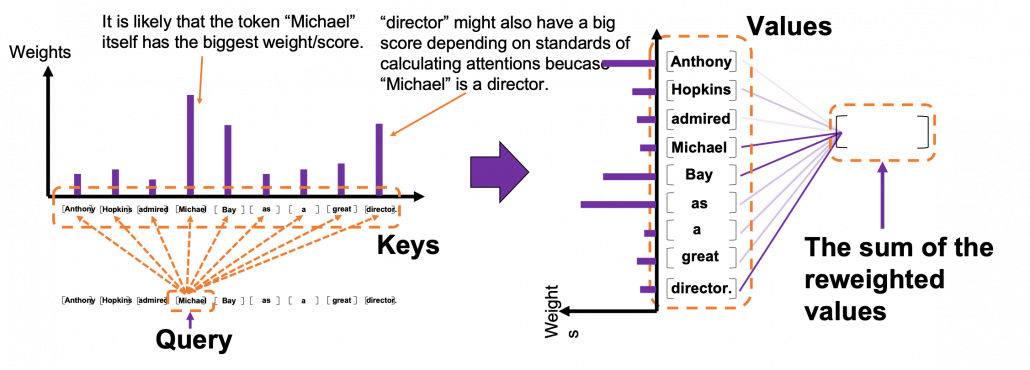

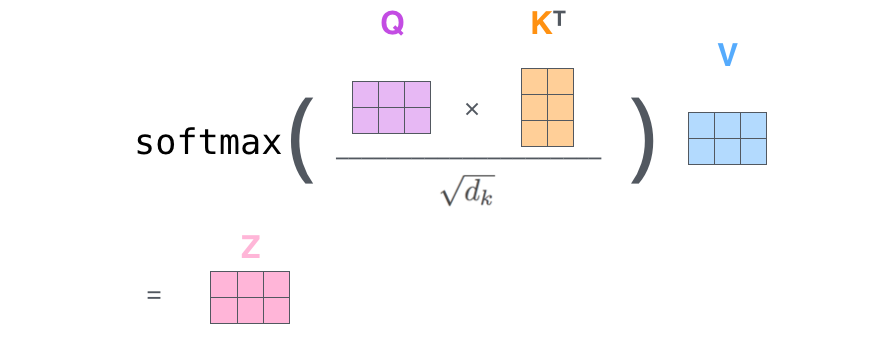

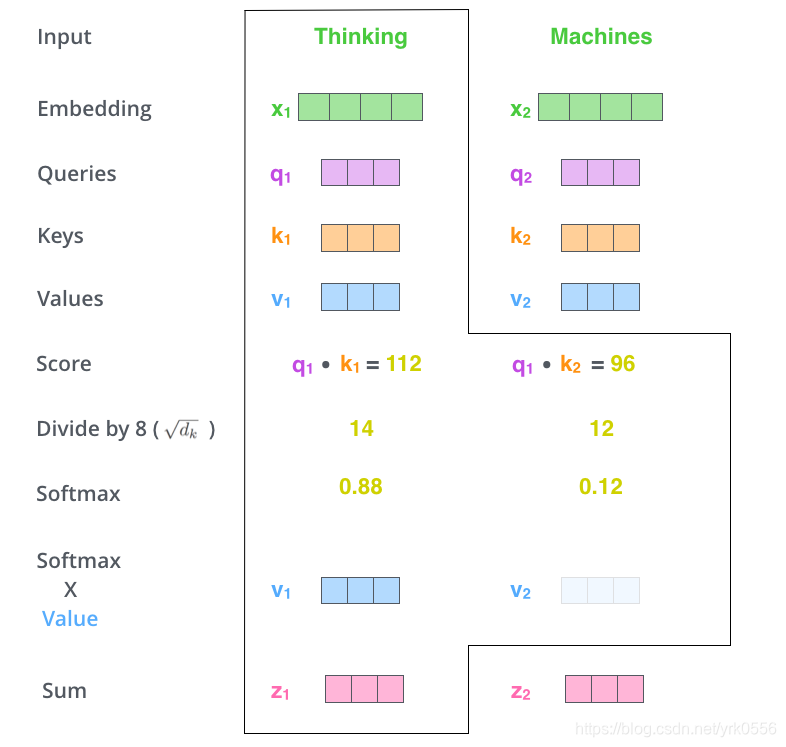

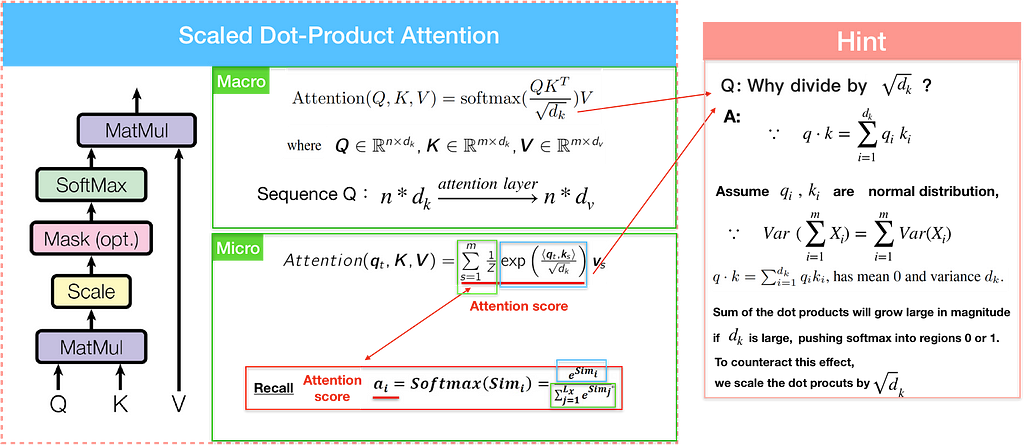

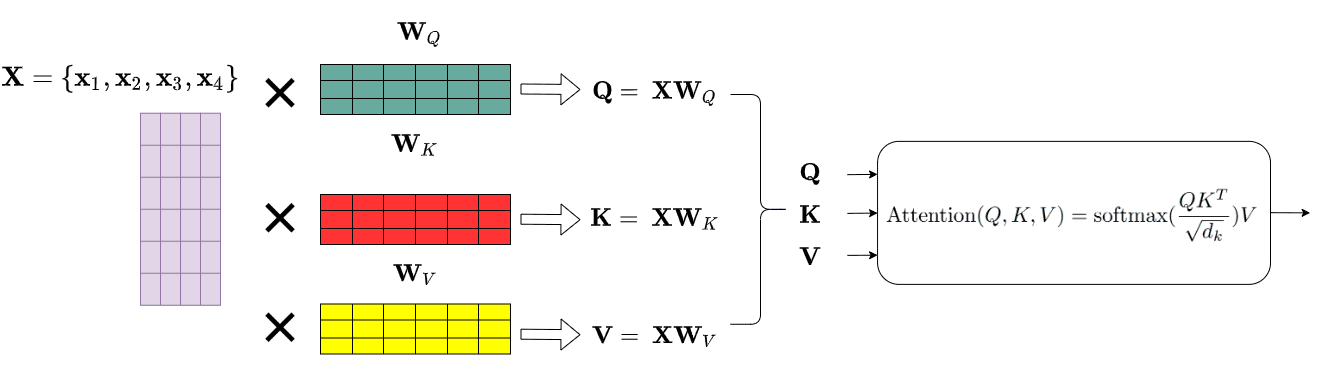

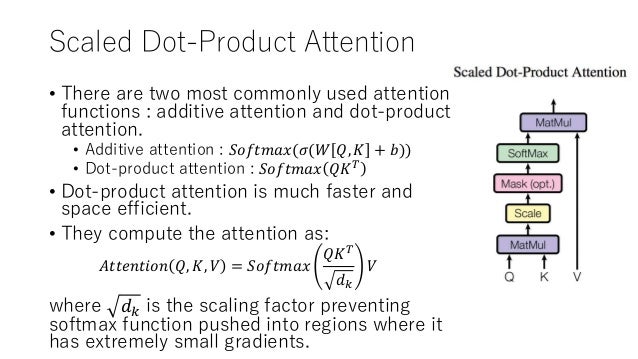

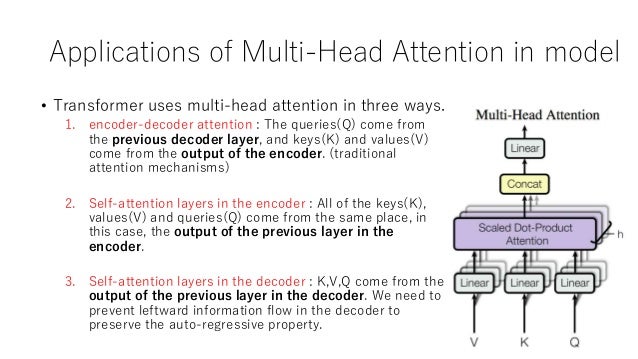

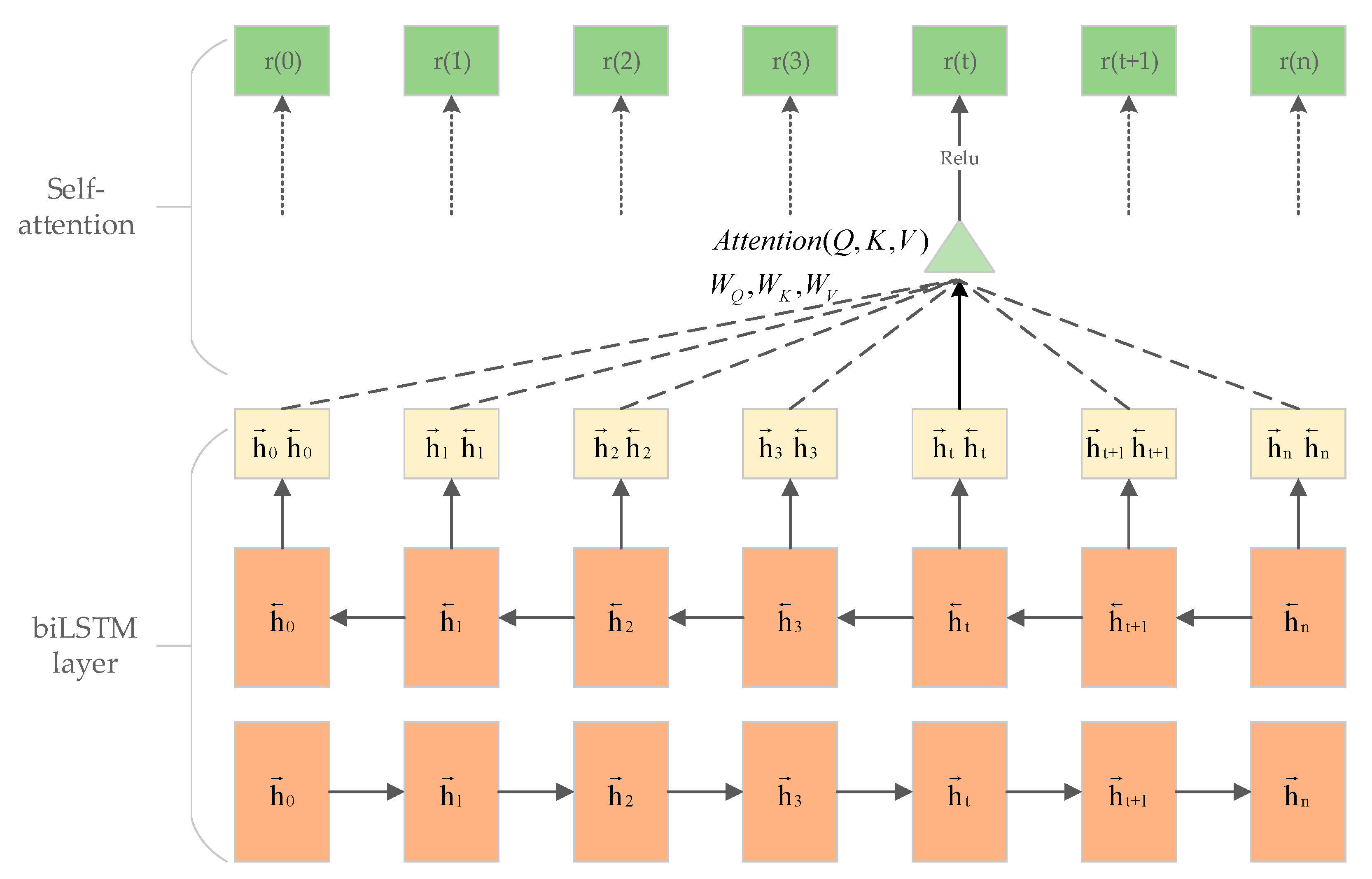

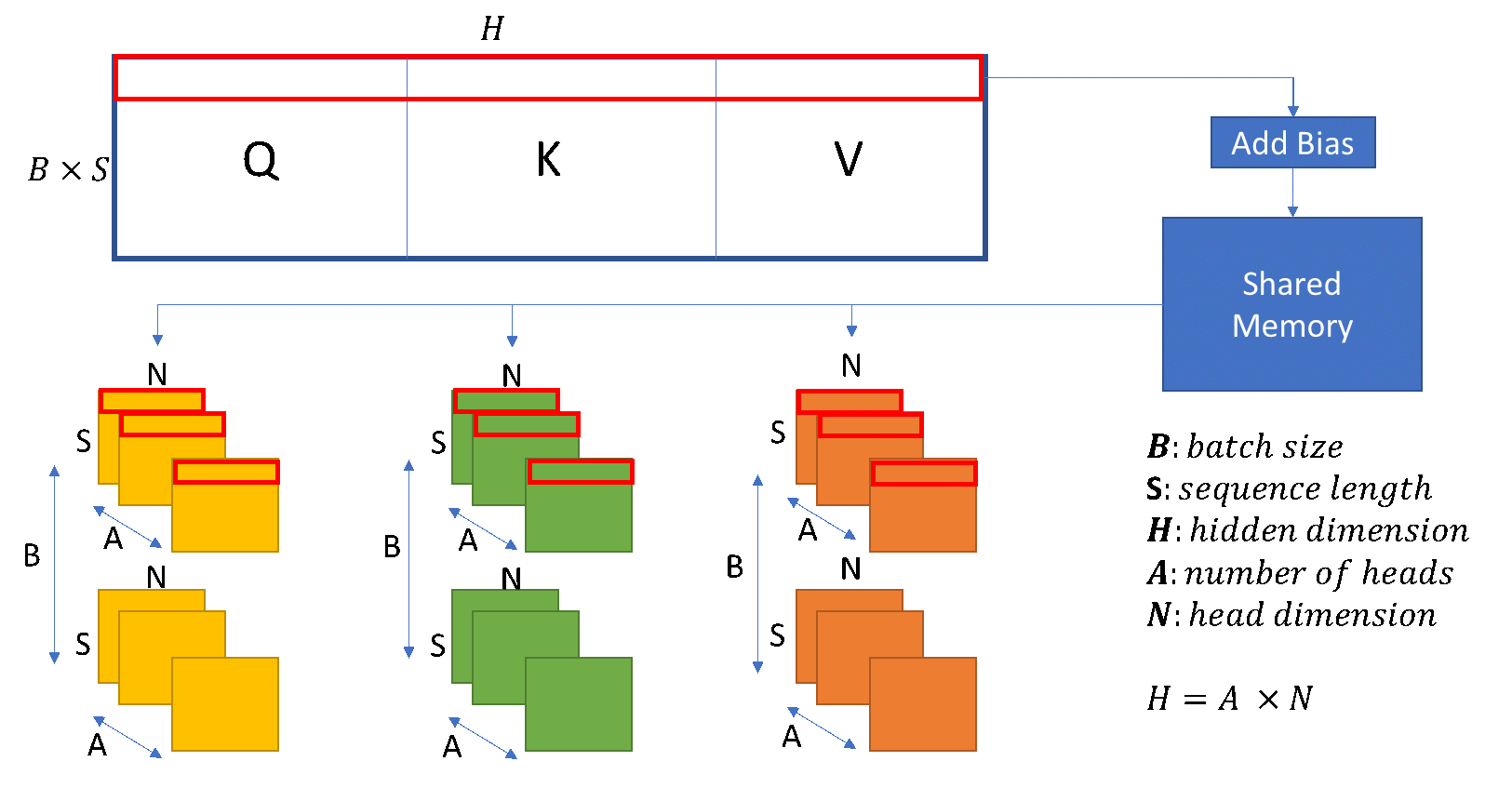

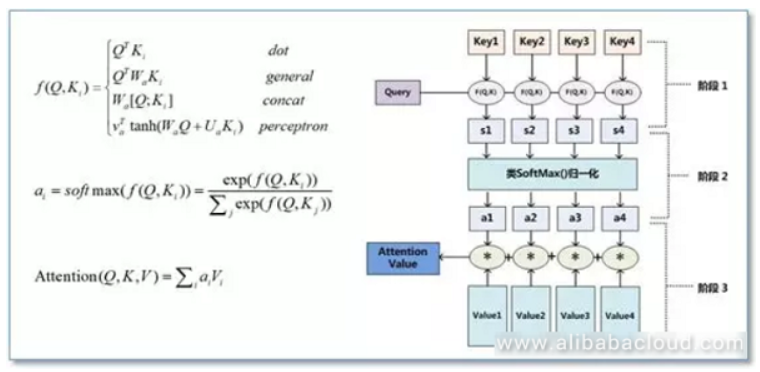

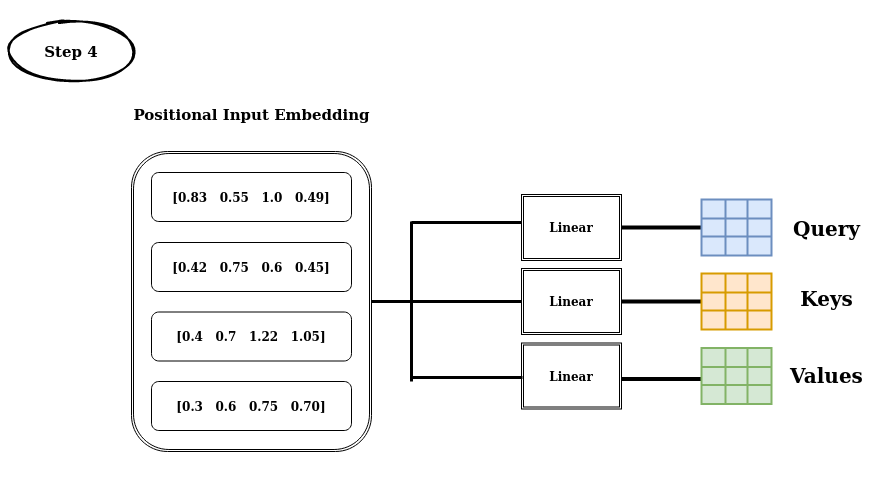

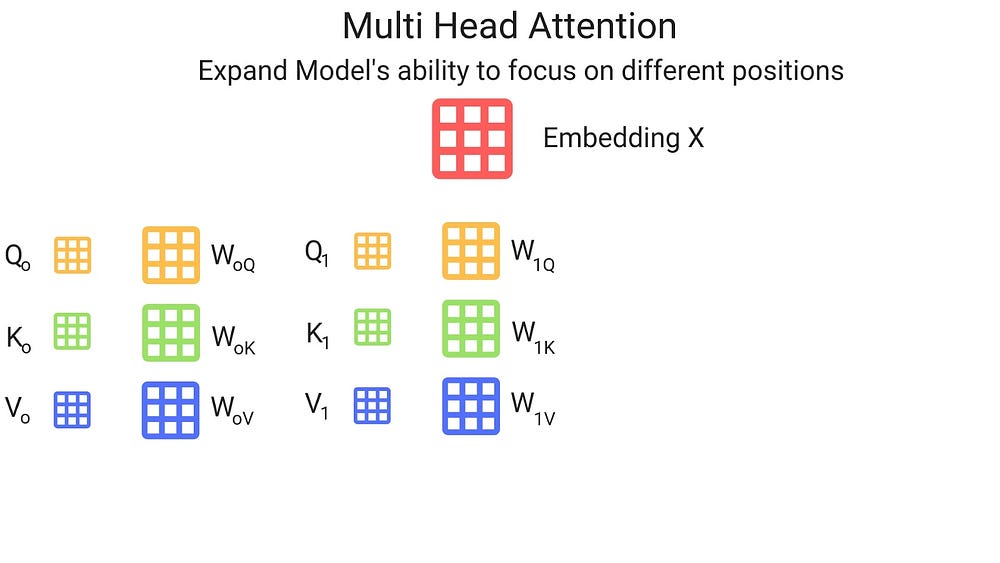

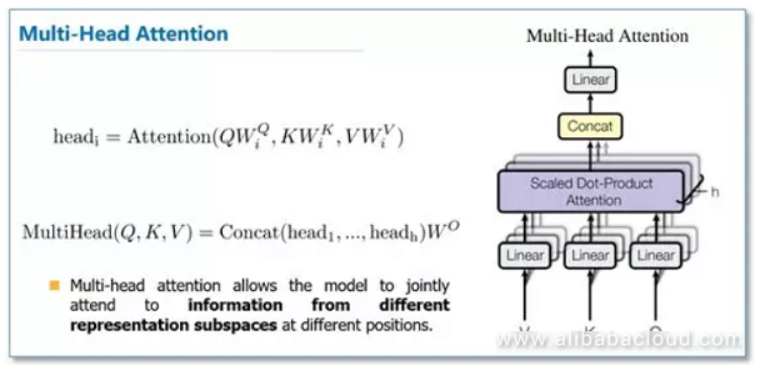

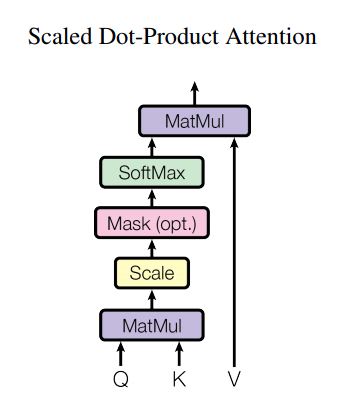

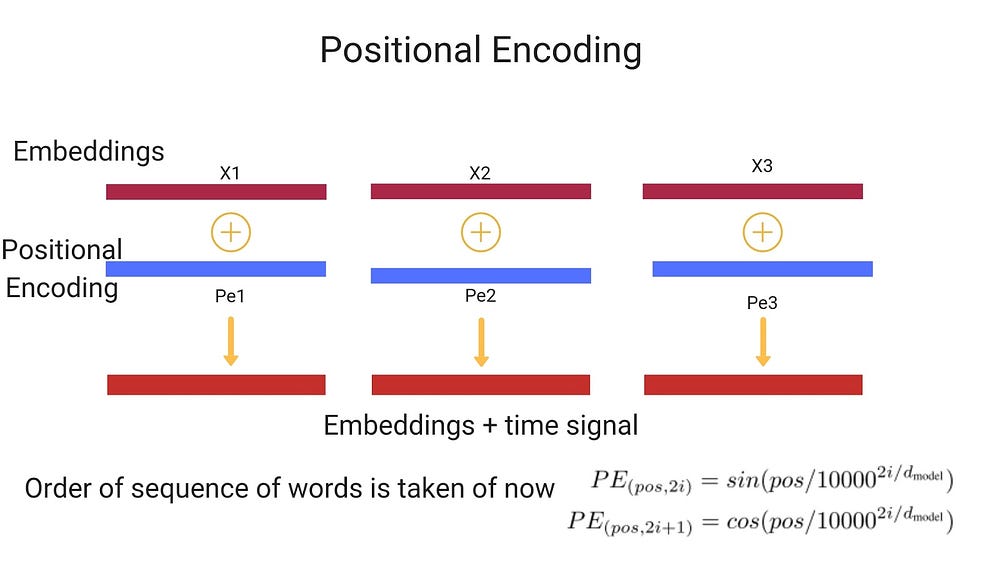

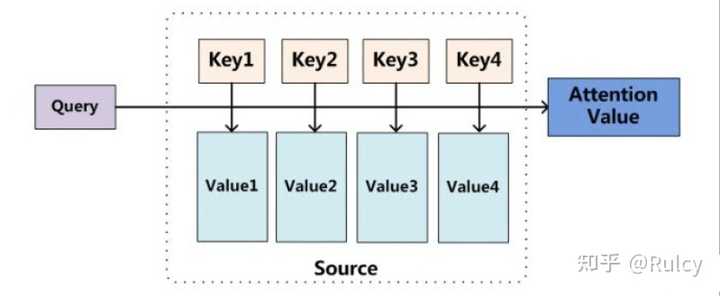

K&G Fashion Superstore for Men's and Women's Clothing, Children's, Shoes, & Accessories. Choose from Suits, Dress Shirts, Ties, & more in regular, big and tall, & plus sizes. The Etruscans used Q in conjunction with V to represent /kʷ/, and this usage was copied by the Romans with the rest of their alphabet. In the earliest Latin inscriptions, the letters C, K and Q were all used to represent the two sounds /k/ and /ɡ/, which were not differentiated in writing. 7/26/ · In self-attention, it can help to think of Q = K = V = X (the input). In practice these four are not equal, but Q, K and V are all obtained from the input X through linear transformations. I will write another article once I understand this better. This is my own view, so please correct me if anything is wrong. Understanding Q, K, V in the attention formula of "Attention Is All You Need".

Find fashion, shoes, sportswear and traditional costume for women, men and children in the K&L online shop and order conveniently on invoice. Free delivery to the store of your choice. Coin-flipping example: a Bernoulli r.v. is sometimes referred to as a coin flip, where p is the probability of landing heads. Observing the outcome of a Bernoulli r.v. is sometimes called performing a Bernoulli trial, or experiment. Keeping in the spirit of (1) we denote a Bernoulli p r.v. Sternolophus rufipes.
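The coin-flip reading of a Bernoulli random variable can be illustrated with a minimal simulation. The helper name `bernoulli_trial`, the seed and the choice p = 0.5 are assumptions made for the example only.

```python
import random


def bernoulli_trial(p: float, rng: random.Random) -> int:
    """Return 1 ("heads") with probability p, otherwise 0 ("tails")."""
    return 1 if rng.random() < p else 0


rng = random.Random(0)   # fixed seed so the run is repeatable
p = 0.5                  # assumed probability of heads (a fair coin)
flips = [bernoulli_trial(p, rng) for _ in range(10_000)]

# The fraction of heads should be close to p for a large number of trials.
print(sum(flips) / len(flips))
```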

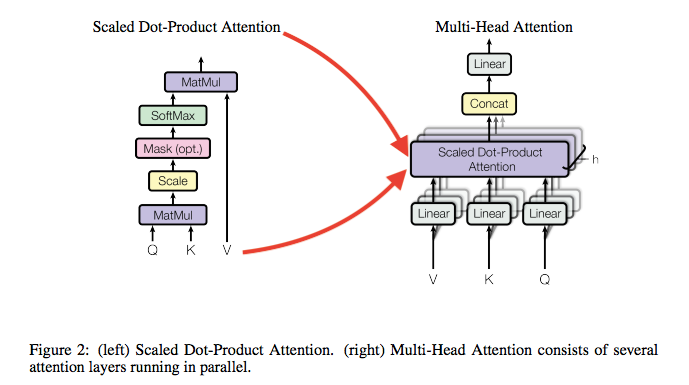

View 71rttxt from ACCCOUNTIN FINANCAILA at George Brown College Canada def multihead_attn(q, k, v) # q, k, v have shape batch, heads, sequence, features w = tf. For us gorgeous XL women, affordably since 08!

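The meaning of Q, K, V in the Transformer's self-attention. If you are not familiar with the Transformer and self-attention, please read the other answers on Zhihu first. I have recently read many posts on this topic. Everyone says it increases the capacity of the network, but nobody explains clearly why. A b c d e f g h i j k l m n Ñ o p q r s t u v w x y z a b. Fourth, substitute the equilibrium concentrations into the equilibrium expression and solve for Ksp.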

$C_V = \left(\frac{\delta q}{dT}\right)_V$ is the heat capacity at constant volume and $C_P = \left(\frac{\delta q}{dT}\right)_P$ is the heat capacity at constant pressure. $C_P$ is always greater than $C_V$. Why? Hint: The difference between $C_P$ and $C_V$ is very small for solids and liquids, but large for gases. MSE 90 Introduction to Materials Science, Chapter 19, Thermal. On a p-v diagram for a closed system, sketch the thermodynamic paths that the system would follow if expanding from volume = v 1 to volume = v 2 by isothermal and quasi-static, adiabatic processes. For which process is the most work done by the system?
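The closing question can be explored numerically with a small sketch. It assumes an ideal gas, a reversible (quasi-static) path in both cases, and the same starting state for both. The heat capacity ratio gamma = 1.4 and the numbers for p1, v1 and v2 are illustrative assumptions, not values from the text.

```python
import math


def isothermal_work(p1: float, v1: float, v2: float) -> float:
    """Work done BY an ideal gas in a reversible isothermal expansion.

    Along the path p*v = p1*v1, so W = p1 * v1 * ln(v2 / v1).
    """
    return p1 * v1 * math.log(v2 / v1)


def adiabatic_work(p1: float, v1: float, v2: float, gamma: float = 1.4) -> float:
    """Work done BY an ideal gas in a reversible adiabatic expansion.

    Along the path p * v**gamma = const, so W = (p1*v1 - p2*v2) / (gamma - 1).
    """
    p2 = p1 * (v1 / v2) ** gamma
    return (p1 * v1 - p2 * v2) / (gamma - 1.0)


# Illustrative state: 100 kPa, expanding from 1 m^3 to 2 m^3.
p1, v1, v2 = 100e3, 1.0, 2.0
w_iso = isothermal_work(p1, v1, v2)
w_adi = adiabatic_work(p1, v1, v2)
print(f"isothermal: {w_iso:.0f} J, adiabatic: {w_adi:.0f} J")
```

Under these assumptions the isothermal path does more work, because the adiabatic curve falls off more steeply on the p-v diagram and encloses less area.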

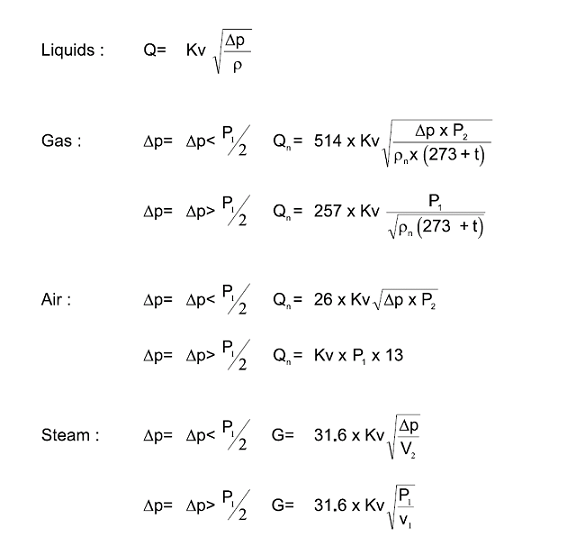

Personal_finance Name Pamela Vernon Date personal finance L Q B Z A L Q Y I K C O T S Z X Q K S Q E S C Q F O Z N Q V D N U F L A U T U M J R H G I N personal_finance Name Pamela Vernon Date personal finance. K, or k, is the eleventh letter of the modern English alphabet and the ISO basic Latin alphabet. Its name in English is kay (pronounced /ˈkeɪ/), plural kays. The letter K usually represents the voiceless velar plosive. A: Area; C: Discharge coefficient; d: density (water density = 1000 kg/m³); D: Diameter; g: Gravity, 9.8 m/s²; K: Flow coefficient (Kv) (Cv); Q: Flow rate.

Founded in California in 1966, K-Swiss is a heritage American tennis brand, known for on-court performance and off-court style. Shop K-Swiss shoes now! 10/27/ · Q = x * Wq, K = x * Wk, V = x * Wv. The attention weight that x places on the information V is proportional to Q * K-transpose. In other words, the attention weights of x are determined by x itself, which is why it is called self-attention. Wq, Wk and Wv are updated according to the task objective, which is what makes the self-attention mechanism effective. The following is the formula for dot-product self-attention.
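The projections Q = x * Wq, K = x * Wk, V = x * Wv and the resulting attention weights can be sketched in plain Python. This is a minimal illustration, not any particular library's implementation. It uses the scaled form softmax(Q Kᵀ / sqrt(d_k)) V from "Attention Is All You Need", and the scaling, the helper names and the toy matrices are all assumptions added for the example.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(r) for r in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, wq, wk, wv):
    """Single-head self-attention: Q, K, V are all projections of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                      # Q * K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy input: 3 tokens with 2 features each; toy projection matrices.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
wq = [[1.0, 0.0], [0.0, 1.0]]
wk = [[1.0, 0.0], [0.0, 1.0]]
wv = [[0.5, 0.0], [0.0, 2.0]]

out, weights = self_attention(x, wq, wk, wv)
print(weights)
print(out)
```

Each row of `weights` sums to 1 and says how much one token attends to every token in the same sequence. In a trained model Wq, Wk and Wv would be learned rather than fixed by hand.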

Title PowerPoint Presentation Author jalbert Created Date 5//21 PM. In cryptography, the Trithemius cipher is a polyalphabetic encoding method invented by Johannes Trithemius during the Renaissance. This method uses the tabula recta, a square diagram of alphabets in which each row is built by shifting the previous row one position to the left.
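The tabula recta lookup amounts to shifting each successive letter by one more position than the last. A minimal sketch follows, assuming the 26-letter English alphabet, a first letter that is left unshifted, and non-letters that pass through without advancing the row. The function name `trithemius` is hypothetical.

```python
import string

ALPHABET = string.ascii_uppercase


def trithemius(text: str, decrypt: bool = False) -> str:
    """Encrypt or decrypt with a progressive shift (row 0, row 1, row 2, ...)."""
    out = []
    pos = 0  # index of the tabula recta row used for the next letter
    for ch in text.upper():
        if ch in ALPHABET:
            shift = -pos if decrypt else pos
            out.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
            pos += 1
        else:
            out.append(ch)  # spaces and punctuation are copied unchanged
    return "".join(out)


cipher = trithemius("HELLO")
print(cipher)                            # HFNOS
print(trithemius(cipher, decrypt=True))  # HELLO
```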

First Law of Thermodynamics. The first law of thermodynamics is the application of the conservation of energy principle to heat and thermodynamic processes. The first law makes use of the key concepts of internal energy, heat, and system work. It is used extensively in the discussion of heat engines. The standard unit for all these quantities would be the joule, although they are. Scrabble® is a registered trademark. The rights are held by Hasbro for the United States of America and Canada, and by Mattel for the rest of the world.

Capacitance is the ratio of the amount of electric charge stored on a conductor to a difference in electric potential. There are two closely related notions of capacitance: self capacitance and mutual capacitance. Any object that can be electrically charged exhibits self capacitance. In this case the electric potential difference is measured between the object and ground. Links with this icon indicate that you are leaving the CDC website. The Centers for Disease Control and Prevention (CDC) cannot attest to the accuracy of a nonfederal website. Linking to a nonfederal website does not constitute an endorsement by CDC or any of its employees of the sponsors or the information and products presented on the website. View l/lk/kj/ lk/lk/kl/k/k’s profile on LinkedIn, the world’s largest professional community. l/lk/kj/ has 1 job listed on their profile. See the complete profile on LinkedIn and discover l/lk.

Preschoolers using writing tools on whiteboards are observed in order to practice observation and recording by identifying one skill ahead of time and quickl.

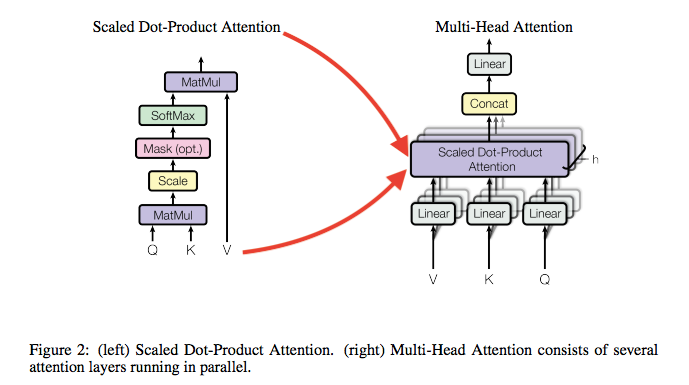

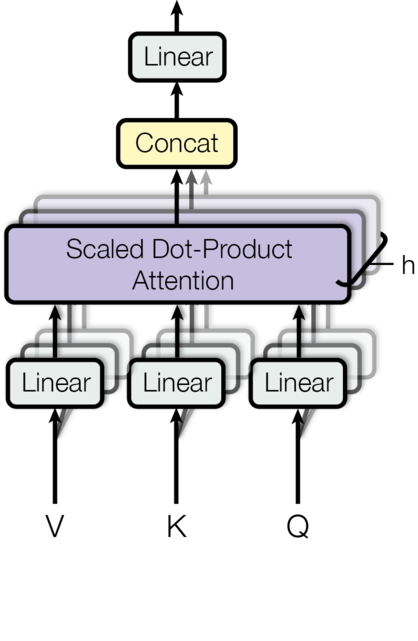

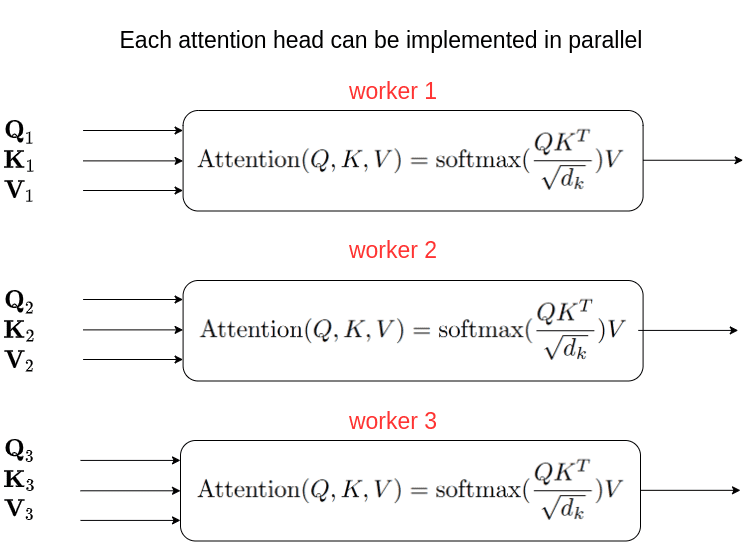

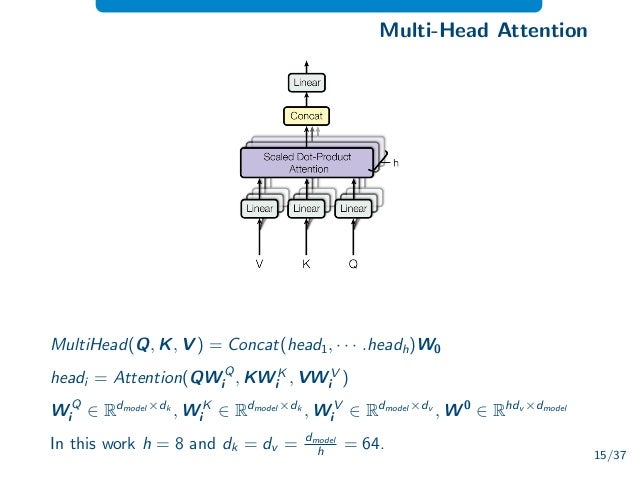

Multi Head Attention Mechanism Queries Keys And Values Over And Over Again Data Science Blog

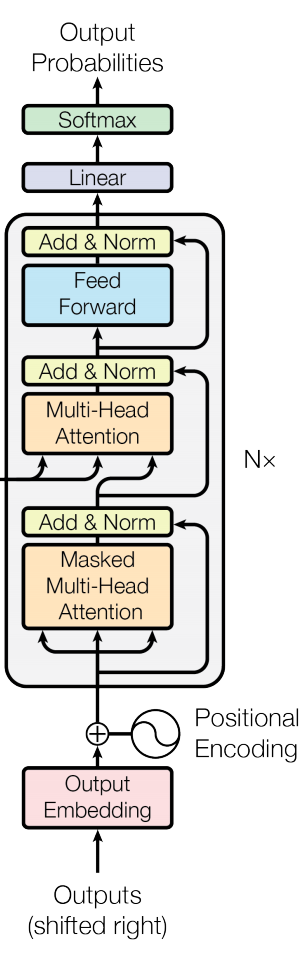

Transformer Ruochi Ai

Seq2seq And Attention

Qk V Gallery

How Transformers Work In Deep Learning And Nlp An Intuitive Introduction Ai Summer

Seq2seq Pay Attention To Self Attention Part 2 Laptrinhx


Wallet Chains Fashion Accessories Feel Funky

Self Attention Transformer Network Coursera

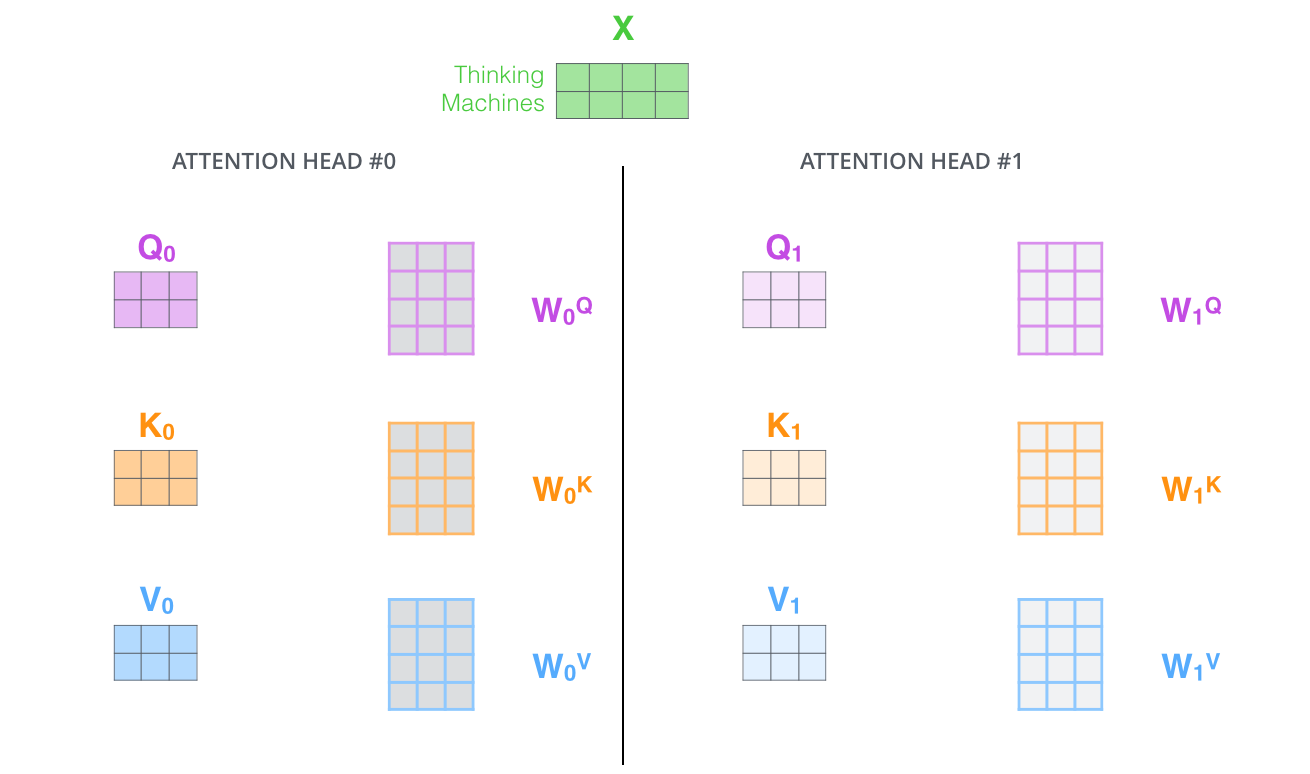

Where Are W Q W K And W V Matrices Coming From In Attention Model Cross Validated

Understanding Q K V In Transformer Self Attention By Mustafac Analytics Vidhya Medium

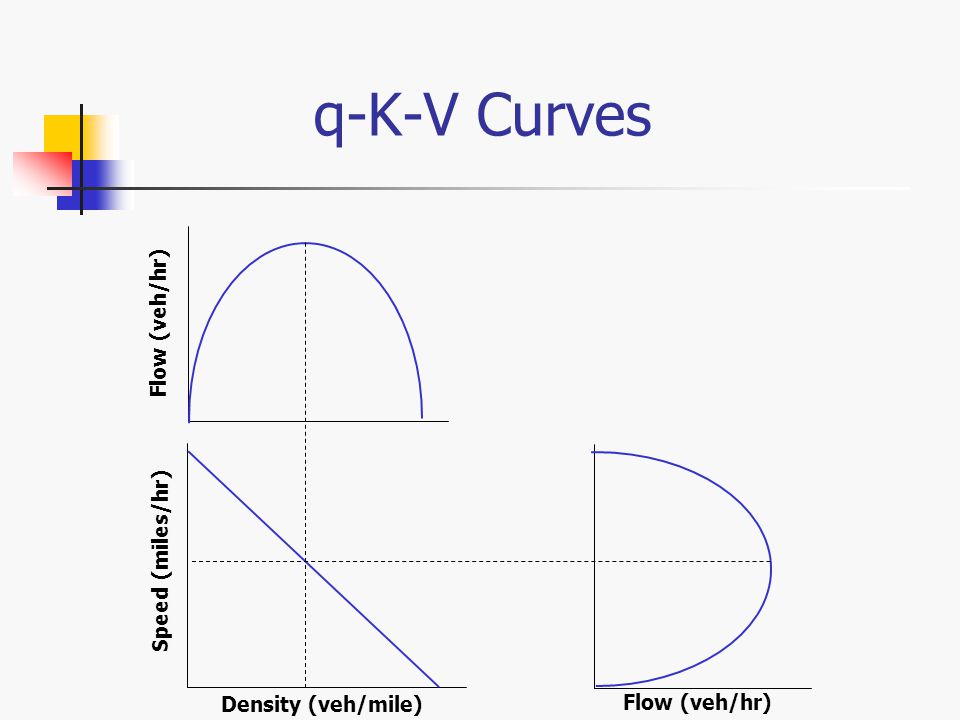

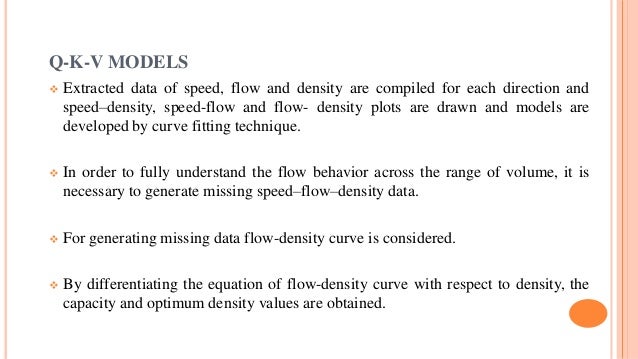

Ce 4640 Transportation Design Ppt Video Online Download

Why Does This Multiplication Of Q And K Have A Variance Of D K In Scaled Dot Product Attention Artificial Intelligence Stack Exchange

Transformer Models In Nlp Laptrinhx

Control Valve Relation Between Cv And Kv Cv 1 156kv

Deep Learning Nlp Transformer Model Programmer Sought

Replicate Your Friend With Transformer By Zuzanna Deutschman Chatbots Life

The Illustrated Transformer Zhihu

Matrices K And V In The Decoder Part In The Transformer Model Cross Validated

Seq2seq And Attention

The Illustrated Gpt 2 Visualizing Transformer Language Models Jay Alammar Visualizing Machine Learning One Concept At A Time

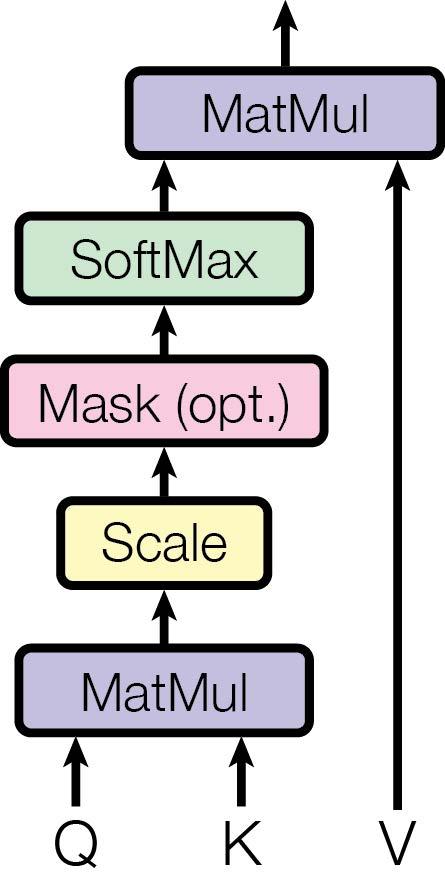

Python Self Attention Process Of Deep Learning Qkv

Transformers Visual Guide An Attempt To Understand Transformers By Mayur Jain Artificial Intelligence In Plain English

Attention Is All You Need Upc Reading Group 18 By Santi Pascual

Audio Visual Speech Recognition Models A Common Encoder The Visual Download Scientific Diagram

Self Attention In Nlp Geeksforgeeks

Where Do Q K V Come From In The Deep Learning Attention Mechanism Zhihu

How Transformers Work In Deep Learning And Nlp An Intuitive Introduction Ai Summer

Lstm Is Dead Long Live Transformers By Jae Duk Seo Becoming Human Artificial Intelligence Magazine

Attention Is All You Need Prettyandnerdy

Transformer Model Explained In Detail 码农家园

What Is The Kv Of A Hydraulic Component Caleffi

The Illustrated Transformer Jay Alammar Visualizing Machine Learning One Concept At A Time

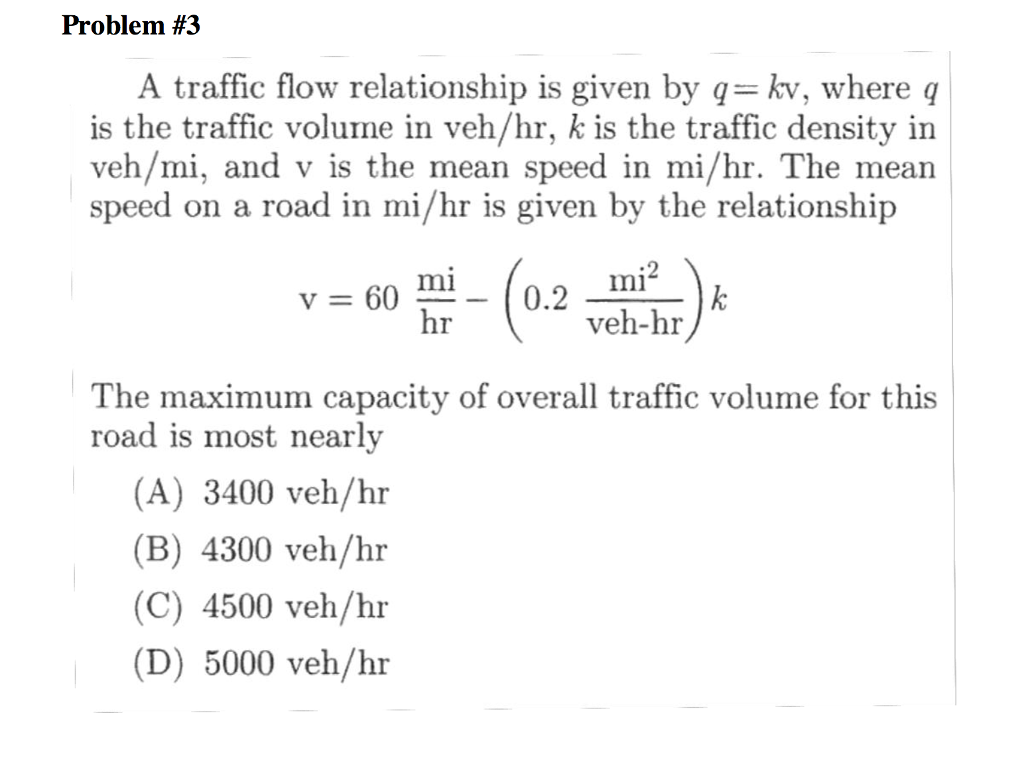

Solved Problem 3 A Traffic Flow Relationship Is Given By Chegg Com

How To Account For The No Of Parameters In The Multihead Self Attention Layer Of Bert Cross Validated

Lstm Is Dead Long Live Transformers By Jae Duk Seo Becoming Human Artificial Intelligence Magazine

Kv Ii Q Series Kit Modelbouwenzo Nl

Kv Ii Q Series Kit Modelbouwenzo Nl

Seq2seq And Attention

Python Self Attention Process Of Deep Learning Qkv

Transformer Explained In Detail Nocater S Blog

Q Link Surface Mounted Wall Socket Ra Kv Double Black Installand

Capacity Estimation Of Two Lane Undivided Highway

Understanding Q K V In Transformer Self Attention By Mustafac Analytics Vidhya Medium

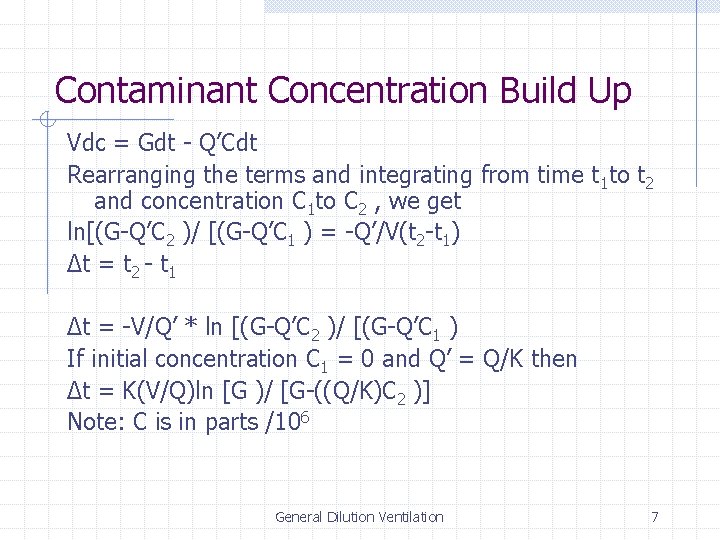

General Dilution Ventilation General Dilution Ventilation The Supply

Where Are W Q W K And W V Matrices Coming From In Attention Model Cross Validated

Understanding Q K V In Transformer Self Attention By Mustafac Analytics Vidhya Medium

What Exactly Are Keys Queries And Values In Attention Mechanisms Cross Validated

Attention Programmer Sought

Understand Self Attention In Bert Intuitively By Xu Liang Towards Data Science

Understanding Q K V In Transformer Self Attention By Mustafac Analytics Vidhya Medium

Multi Head Attention Mechanism Queries Keys And Values Over And Over Again Data Science Blog

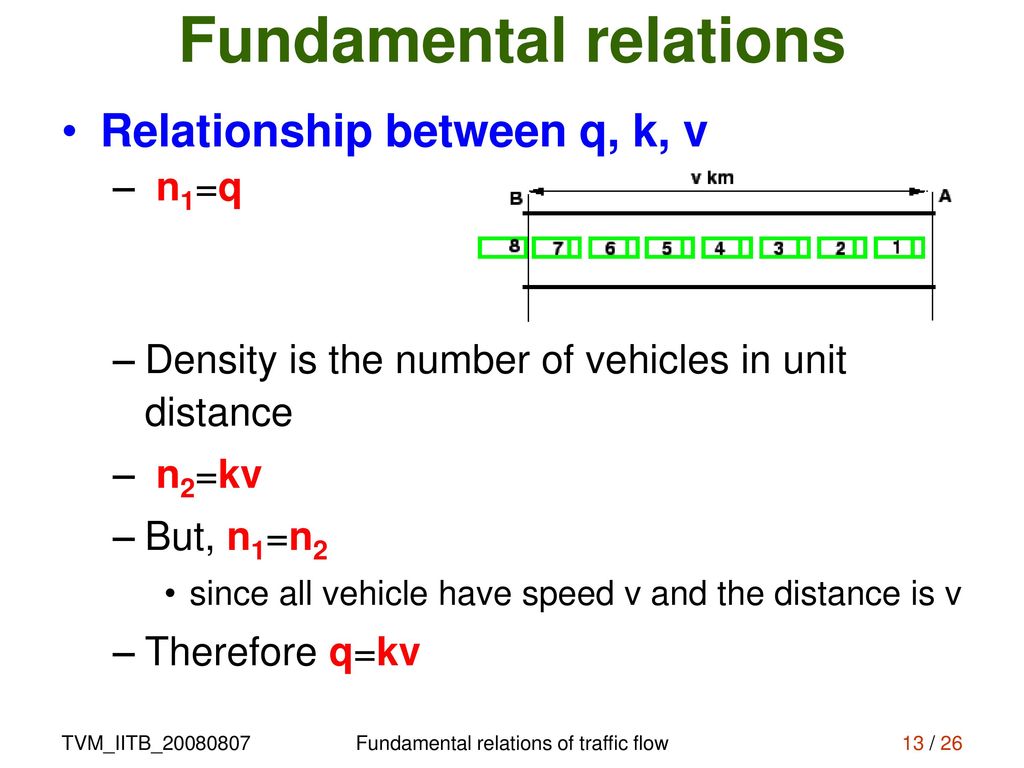

Fundamental Relations Of Traffic Flow Ppt Download

Attention Is All You Need Develop Paper

Trying To Understand Scaled Dot Product Attention For Transformer Architecture James D Mccaffrey

From Transformers To Performers Approximating Attention By Chiara Campagnola Towards Data Science

The Notations Used For Q K V And Download Scientific Diagram

Breaking Bert Down What Is Bert By Shreya Ghelani Towards Data Science

Steel Doors Binnenwerck Interieur

Python Self Attention Process Of Deep Learning Qkv

Understanding Q K V In Transformer Yrk0556's Blog CSDN Blog


Workshirts Men's Clothing Feel Funky

Attention Is All You Need Ppt Download

Seq2seq Pay Attention To Self Attention Part 2 Laptrinhx


Stainless Steel Ear Rings Earrings Jewelry Feel Funky

Understand Self Attention In Bert Intuitively By Xu Liang Towards Data Science

Flow Calculation Kv Beta Valve News

Transformer Multi Head Attention Programmer Sought

Transformer

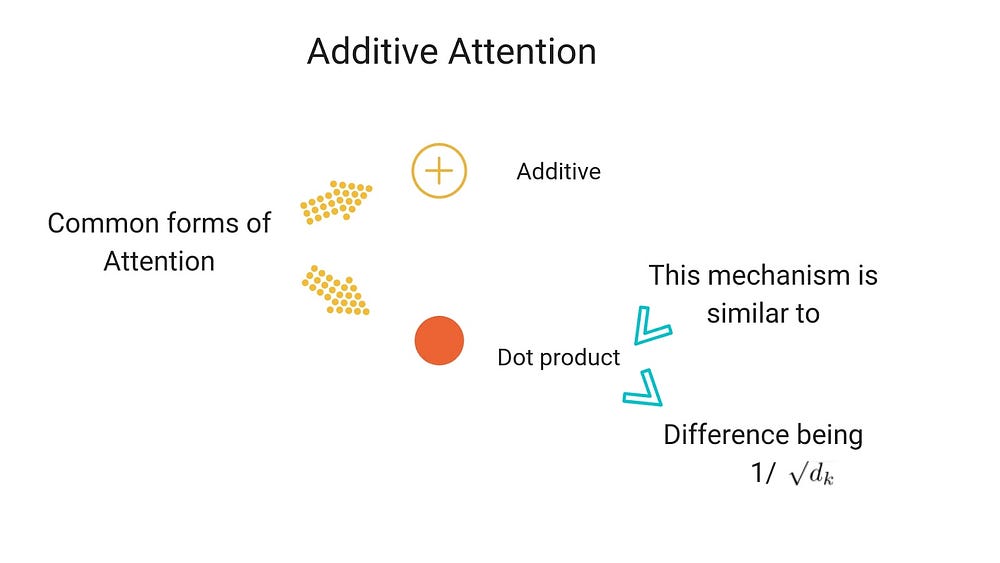

Attention And Its Different Forms By Anusha Lihala Towards Data Science

How Transformers Work In Deep Learning And Nlp An Intuitive Introduction Ai Summer

Python Self Attention Process Of Deep Learning Qkv

Introduction Fatmah Ebrahim Traffic Jam Facts There Are 500 000 Traffic Jams A Year That S 10 000 A Week Or A Day Traffic Congestion Costs Ppt Download

Principles And Implementation Of Self Attention And Multi Head Attention 陈建驱's Blog CSDN Blog Multi Head Self Attention

Intuition Behind The Use Of Multiple Attention Heads Cross Validated

Paper Reading Attention Is All You Need

Paper Reading Attention Is All You Need

What Does Qkv Mean Definition Of Qkv Qkv Stands For Quaking Viable By Acronymsandslang Com

Qkv Osaka Japan

Reach And Limits Of The Supermassive Model Gpt 3

Applied Sciences Free Full Text Information Extraction From Electronic Medical Records Using Multitask Recurrent Neural Network With Contextual Word Embedding Html

Q Link Surface Mounted Wall Socket Ra Kv Triple Black Installand

Berker Q 1 Q 3 Wall Socket Flush Mounted Installation Ra 2v Kv White Rexel Electrical Wholesaler

Microsoft Deepspeed Achieves The Fastest Bert Training Time Deepspeed

Self Attention Mechanisms In Natural Language Processing Dzone Ai

Transformer Hung Yi Lee Deep Learning Hackmd

Steel Doors Binnenwerck Interieur

NLP Study 5 Attention Self Attention Seq2seq Transformer 吱吱了了 Cnblogs

Transformers Visual Guide An Attempt To Understand Transformers By Mayur Jain Artificial Intelligence In Plain English

Understanding Q K V In Transformer Self Attention By Mustafac Analytics Vidhya Medium

Attention And Its Different Forms By Anusha Lihala Towards Data Science

Transformer Models In Nlp Laptrinhx

Self Attention Mechanisms In Natural Language Processing Dzone Ai

Mamakass Throwback 15 Andrea Nardinocchi Qkv From

Transformer

Illustrated Guide To Transformers Step By Step Explanation By Michael Phi Towards Data Science

Transformer Models In Nlp Laptrinhx

Where Do Q K V Come From In The Deep Learning Attention Mechanism Zhihu

Attention Dropout Explained Papers With Code


Drop Earrings With Large Blue Beads Feel Funky